If you’re working with large datasets in R, you’ve probably seen this error:

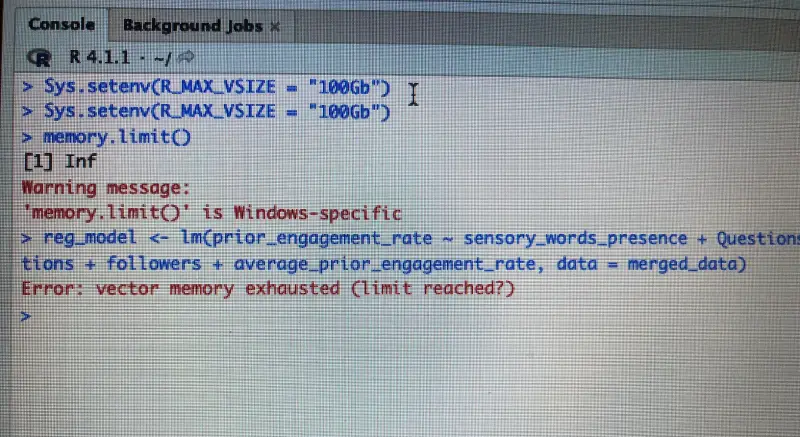

Error: vector memory exhausted (limit reached?)

It’s frustrating, especially when you’re deep in data analysis or training a machine learning model.

This error usually comes up when your PC runs out of RAM or when R reaches its limit on the memory it may allocate for vectors. The good news is that there are ways to fix it without upgrading your hardware. Yes, you read that right. Below are step-by-step methods to solve it.

Before trying the solutions, it helps to understand why the vector memory exhausted (limit reached?) error appears in the first place. Once you know the causes, you can avoid running into it again.

Why Does This Error Happen?

The “error: vector memory exhausted (limit reached?)” is common when handling large datasets, performing complex computations, or working with huge matrices.

These are the common reasons for this error:

1. Insufficient physical memory (RAM):

Your system doesn’t have enough memory to allocate new vectors (the core data structure in R).

How to Check:

On Windows: Open Task Manager (Ctrl + Shift + Esc) > Go to the Performance tab > Check Memory Usage.

On Linux: Open the command line > Run grep MemTotal /proc/meminfo to check total RAM.

On Mac: Open Activity Monitor > Click on Memory to see RAM usage.

2. 32-bit vs. 64-bit limitations:

A 32-bit system, or a 32-bit build of R, can address at most 4GB of memory per process, and often less in practice.

How to Check:

On Windows: Open cmd > type msinfo32 > Check the System Type row (for example, x64-based PC means 64-bit).

On Linux: Open Terminal > type uname -m > An output such as x86_64 or aarch64 means 64-bit; i386 or i686 means 32-bit.

On Mac: Launch Terminal > type uname -m > An output of x86_64 or arm64 means 64-bit.

If any of these report a 32-bit system, the 4GB per-process limit could be what is causing the error.

3. Memory fragmentation:

Your OS might have free RAM, but it’s too scattered to allocate a large contiguous block.

4. Default memory limits in R:

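R can impose its own cap on the memory used for vectors. On macOS this cap is controlled by the R_MAX_VSIZE environment variable, and older Windows builds of R had a separate limit managed with memory.limit().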

5. Inefficient data handling:

If your code processes millions of rows and columns, R might not have enough memory to store them. Use data.table instead of data.frame.

6. No garbage collection:

R frees memory only for objects that are no longer referenced, so large leftover objects keep holding RAM. Remove them with rm(), then use gc() to run garbage collection manually.

How to Solve Vector Memory Exhausted Errors?

While encountering the vector memory exhausted (limit reached?) error can be frustrating, there are several ways to resolve it effectively. Below are some practical solutions for fixing this issue in R, RStudio, and other environments.

1. Increase Memory Limit in R

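Before optimizing, check how much memory R is allowed to use. On Windows with R 4.1 or earlier, run:

memory.limit()

This returns the current limit in MB. On 64-bit R the default is normally the amount of installed RAM, while 32-bit R is capped at 4GB or less. If the limit is lower than the RAM you have, increase it:

memory.limit(size = 16000) # Set to roughly 16GB (the value is in MB)

RStudio runs an ordinary R session, so the same limits apply there.

Note: memory.limit() only works on Windows, and from R 4.2.0 onward it is a stub that no longer changes anything. On Linux, R uses all available memory by default. On macOS, the limit behind this error is set by the R_MAX_VSIZE environment variable, which you can raise in your ~/.Renviron file (for example, R_MAX_VSIZE=32Gb) before restarting R.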
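On macOS, the cap that usually triggers this error is the R_MAX_VSIZE environment variable rather than memory.limit(). The snippet below is a minimal sketch for inspecting it from inside R. The 32Gb value in the comment is only an illustrative assumption, so pick a value that suits your machine.

```r
# An empty string means R_MAX_VSIZE is not set and R falls back to its built-in default
current_limit <- Sys.getenv("R_MAX_VSIZE")

if (nzchar(current_limit)) {
  message("R_MAX_VSIZE is set to ", current_limit)
} else {
  message("R_MAX_VSIZE is not set; R is using its default limit")
}

# To raise the limit, add a line like the following to ~/.Renviron
# and restart R (32Gb is an example value, not a recommendation):
# R_MAX_VSIZE=32Gb
```

Setting the limit far above your physical RAM pushes R into swap, which is slow, so treat this as a stopgap rather than a cure.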

2. Free Up Unused Memory

R runs garbage collection automatically, but memory stays occupied for as long as objects are still referenced in your session. Remove what you no longer need, then call gc() to trigger a collection:

| Goal | Command |

| Force R to free unused memory | gc() |

| R session is filled with objects you no longer need | rm(list = ls()) # Delete all objects |

| Clear only specific objects | rm(large_dataframe) |

This prevents memory buildup and reduces the chances of hitting the vector memory exhausted error.
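To see the effect of these commands, here is a small sketch that creates a throwaway object, measures it, removes it, and then runs garbage collection. The object name large_vector is hypothetical and exists only for this demonstration.

```r
# Hypothetical large object: 5 million doubles, roughly 40 MB
large_vector <- numeric(5e6)
print(object.size(large_vector), units = "MB")

# Drop the only reference to the object
rm(large_vector)

# Trigger garbage collection; gc() also returns a memory usage summary
mem_summary <- gc()
print(mem_summary[, 1:2])  # cells in use and their size in Mb
```

Running gc() before the rm() call would free nothing here, because the object would still be referenced.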

3. Use data.table instead of data.frame

If you’re handling large datasets, data.frame can be a poor fit: many common operations create copies of the data, which wastes memory. Switch to data.table, which is optimized for large datasets and can update columns in place.

library(data.table)

df <- fread("large_dataset.csv")

Here, fread() is much faster than read.csv() and typically uses less memory while loading.
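You can cut memory use further by loading only the columns and rows you need. The sketch below assumes the data.table package is installed. It writes a small stand-in CSV to a temporary file, and the column names (id, price, notes) are made up for illustration.

```r
library(data.table)

# Write a small stand-in file; in practice this would be your large CSV
csv_path <- tempfile(fileext = ".csv")
fwrite(data.table(id = 1:100, price = runif(100), notes = "unused text"), csv_path)

# Load only two of the three columns and only the first 50 rows
dt <- fread(csv_path, select = c("id", "price"), nrows = 50)

print(dim(dt))
```

The select and nrows arguments tell fread() to skip everything else while parsing, so the unwanted columns never reach memory.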

4. Optimize Your Code

Optimizing your code to avoid inefficient memory usage is crucial. Using vectorized operations instead of loops in R can help prevent the vector memory exhaustion issue.

Vectorized operations process entire vectors of data at once, rather than iterating over individual elements. Example of Optimization:

| Inefficient loop | Optimized version |

| for (i in seq_along(vec)) { vec[i] <- vec[i] + 1 } | vec <- vec + 1 |

The vectorized form runs much faster and avoids the per-element overhead of the loop, which matters most on the large vectors that tend to trigger the vector memory exhausted (limit reached?) error.
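When a loop is unavoidable, a related habit is to allocate the result vector once instead of growing it on every iteration. The following sketch compares a preallocated loop with the vectorized equivalent. The variable names are illustrative.

```r
n <- 1e5

# Preallocate the full result once, then fill it in
squares_loop <- numeric(n)
for (i in seq_len(n)) {
  squares_loop[i] <- i^2
}

# Vectorized equivalent: one operation over the whole vector
squares_vec <- seq_len(n)^2

# Both approaches produce the same values
print(identical(squares_loop, squares_vec))
```

Growing a vector with c() inside a loop forces R to reallocate it repeatedly, which is where much of the wasted memory and time comes from.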

5. Use Smaller Data Types

In R, numeric data is stored as double-precision floating-point numbers, which can consume more memory than necessary.

For large datasets, you can switch to smaller data types, such as integers, to conserve memory. For example:

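Convert to integer for smaller memory usage (only when the values are whole numbers, because as.integer() drops any fractional part): vec <- as.integer(vec)

Similarly, use logical vectors when dealing with binary data. Like integers, they take 4 bytes per element, half the 8 bytes of a numeric (double) value.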
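A quick way to check the saving is to compare object sizes directly. This sketch builds one million whole-number values stored as doubles, then converts them to integer and logical vectors. The data is randomly generated for illustration.

```r
set.seed(1)

# One million whole numbers stored as doubles (8 bytes each)
x_double <- as.numeric(sample(100, 1e6, replace = TRUE))

# Same values as integers (4 bytes each) and as a logical flag (4 bytes each)
x_int <- as.integer(x_double)
x_lgl <- x_double > 50

sizes_bytes <- c(
  double  = as.numeric(object.size(x_double)),
  integer = as.numeric(object.size(x_int)),
  logical = as.numeric(object.size(x_lgl))
)

# Report sizes in megabytes
print(round(sizes_bytes / 1024^2, 2))
```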

6. Sparse Matrices for Large Datasets

When working with large datasets that contain a lot of zeros, consider using sparse matrices to save memory. Sparse matrices only store non-zero elements, significantly reducing memory usage.

library(Matrix)

sparse_matrix <- Matrix(0, nrow = 10000, ncol = 10000, sparse = TRUE)

Here, the Matrix() function from the Matrix package creates a sparse matrix when sparse = TRUE is specified. The first argument, 0, sets every element to zero, but because the matrix is sparse, no memory is allocated for those zeros; only metadata for the structure is stored until non-zero values are added.

This can help resolve the vector memory exhausted (limit reached?) error when it is caused by large, mostly empty matrices.
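To make the saving concrete, the sketch below builds a 1000 x 1000 matrix with only three non-zero entries using sparseMatrix() from the Matrix package, then compares it with its dense equivalent. The positions and values are arbitrary examples.

```r
library(Matrix)

# Sparse matrix defined by the row index, column index, and value of each non-zero cell
sparse_m <- sparseMatrix(
  i = c(1, 500, 1000),
  j = c(1, 500, 1000),
  x = c(1.5, 2, 3),
  dims = c(1000, 1000)
)

# Dense copy of the same matrix: stores all one million cells
dense_m <- as.matrix(sparse_m)

print(object.size(sparse_m), units = "Kb")
print(object.size(dense_m), units = "Kb")
```

The benefit shrinks as the share of non-zero cells grows, so sparse storage pays off mainly when most of the matrix is empty.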

7. Avoid Creating Unnecessary Copies of Data

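A common mistake in R is creating multiple copies of large datasets. Assigning a data frame to a new name does not copy it right away, but R duplicates the affected data as soon as you modify either version:

df2 <- df # No copy yet; both names share the same data

df2$old_col[1] <- 0 # Modifying df2 makes R copy the affected data

Use data.table, which can modify data by reference without duplication. This saves RAM and helps prevent the error:

library(data.table)

setDT(df) # Converts df to a data.table in place, without copying

df[, new_col := old_col * 2] # Modifies in place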
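Modifying by reference has one consequence worth knowing: assigning a data.table to a second name does not create an independent table. The sketch below, which assumes the data.table package is installed and uses made-up column names, shows the difference between a plain assignment and an explicit copy().

```r
library(data.table)

dt <- data.table(old_col = 1:3)

# Plain assignment: both names point to the same table
alias <- dt
alias[, new_col := old_col * 2]   # dt gains new_col as well

# Explicit copy: a separate table that uses additional memory
independent <- copy(dt)
independent[, extra := 0]         # dt is not affected

print(names(dt))
print(names(independent))
```

Use copy() only when you really need a separate version, since it is the step that duplicates the data.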

8. Increase Virtual Memory (Swap Space)

If you’re running out of physical RAM, increasing swap space can help, although swap is much slower than RAM. On Windows:

- Search for “Advanced System Settings”.

- Go to Performance > Advanced > Virtual Memory.

- Increase the paging file size to double your RAM size.

9. Upgrade to a 64-bit System

If you’re using a 32-bit system or a 32-bit build of R, R can’t allocate more than 4GB of RAM. The best solution? Upgrade to a 64-bit OS and install 64-bit R.

To check your R build:

R.version$arch

If it says “i386” or “i686” rather than a 64-bit value such as “x86_64” or “aarch64”, you’re running 32-bit R. Upgrade to 64-bit R for better memory management.
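Another way to confirm the build, independent of the architecture string, is to look at the pointer size R reports. This is a small sketch using only base R.

```r
# Pointer size is 8 bytes on a 64-bit build of R and 4 bytes on a 32-bit build
r_bits <- .Machine$sizeof.pointer * 8

cat("This R session is a", r_bits, "bit build\n")
cat("Architecture reported by R:", R.version$arch, "\n")
```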

10. Upgrade Hardware or Use Cloud Solutions

If you continue encountering the vector memory exhausted error after trying the steps above, consider upgrading your hardware. More RAM is what helps here, since the error is about memory rather than processing speed. Additionally, cloud-based solutions like AWS, Google Cloud, and Microsoft Azure provide scalable resources to process data without hitting memory limits.

For example, if your dataset simply does not fit in the RAM of your local machine, moving the job to a cloud instance with more memory can be a good solution.

Common Specific Cases For RStudio Vector Memory Exhausted (Limit Reached?) Error

| Problem | How To Solve? |

| Error when creating complex plots: vector memory exhausted (limit reached?) | This can occur when creating complex plots that require significant memory for rendering. Optimizing the size and complexity of the data being plotted can help. |

| vector memory exhausted (limit reached) one hot encoding | One hot encoding creates many columns for categorical variables, which can lead to high memory consumption. Reducing the number of categories or using sparse matrices for encoding can resolve this issue. |

| fread error: vector memory exhausted (limit reached?) | This error often happens when trying to load large datasets using fread(). Consider splitting the data into smaller files or reading it in chunks. |

| regsubsets error: vector memory exhausted (limit reached?) | In R, the regsubsets function from the leaps package can be memory-intensive. Using smaller datasets or selecting a subset of predictors can mitigate this issue. |

| error: vector memory exhausted (limit reached?) sql | When working with SQL queries in R, large data transfers from a database can cause memory exhaustion. Limiting the size of the result set or performing more granular queries can help. |

| geom_raster error: vector memory exhausted (limit reached?) | This typically happens in ggplot2 when using geom_raster() to visualize large raster data. Consider simplifying the raster image or using data aggregation to reduce memory load. |

| rds error: vector memory exhausted | This error happens when R tries to read or process a .rds file that is too large for the available memory. Freeing memory before calling readRDS(), or saving the data in smaller pieces, can help. |

| r distm error: vector memory exhausted | This error is related to the distm() function from the geosphere package, which calculates a distance matrix between sets of geographic points. The result has one cell for every pair of points, so it grows very quickly and R runs out of memory. You can compute distances for smaller batches of points or run the job on a machine with more memory. |

| Error during wrapup: vector memory exhausted (limit reached?) | This error typically happens when R is wrapping up a complex task and cannot allocate enough memory for the final operations. Reducing the size of the dataset or running the process in smaller steps can help resolve this issue. |
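Several of the fixes above come down to processing data in smaller pieces. As one illustration, the sketch below reads a CSV in chunks with fread() and keeps only a running total instead of the full dataset. It assumes the data.table package is installed. The file, the column names, and the chunk size are hypothetical stand-ins.

```r
library(data.table)

# Small stand-in file; in practice this would be the CSV that does not fit in memory
csv_path <- tempfile(fileext = ".csv")
fwrite(data.table(id = 1:10, value = (1:10) * 1.5), csv_path)

chunk_size <- 4                                  # rows per chunk (illustrative)
col_names <- names(fread(csv_path, nrows = 0))   # read the header only
rows_to_skip <- 1                                # start after the header line
running_total <- 0
rows_seen <- 0

repeat {
  # Read the next block of rows; an error here means there is nothing left to read
  chunk <- tryCatch(
    fread(csv_path, skip = rows_to_skip, nrows = chunk_size,
          header = FALSE, col.names = col_names),
    error = function(e) NULL
  )
  if (is.null(chunk) || nrow(chunk) == 0) break

  # Keep only the summary, not the chunk itself
  running_total <- running_total + sum(chunk$value)
  rows_seen <- rows_seen + nrow(chunk)
  rows_to_skip <- rows_to_skip + nrow(chunk)

  if (nrow(chunk) < chunk_size) break            # last, partial chunk
}

cat("Rows processed:", rows_seen, "\n")
cat("Sum of value column:", running_total, "\n")
```

The same pattern applies to database queries: fetch a limited number of rows at a time and aggregate as you go, rather than pulling the whole result set into R.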

Conclusion

The vector memory exhausted (limit reached?) error is a challenging issue that many developers and data analysts face when working with large datasets or performing memory-heavy operations in R or RStudio.

By following the optimization tips, using smaller data types, and employing more efficient libraries, you can effectively prevent this error from occurring.
